I was studying reinforcement learning a while ago, attempting to educate myself about deep Q learning. As part of that effort, I read through the first few chapters of Reinforcement Learning: An A Introduction by Sutton and Barto. Here are my notes on Chapter 4. Like all of my other notes, these were never intended to be shared, so apologies in advance if they make no sense to anyone.

Chapter 4

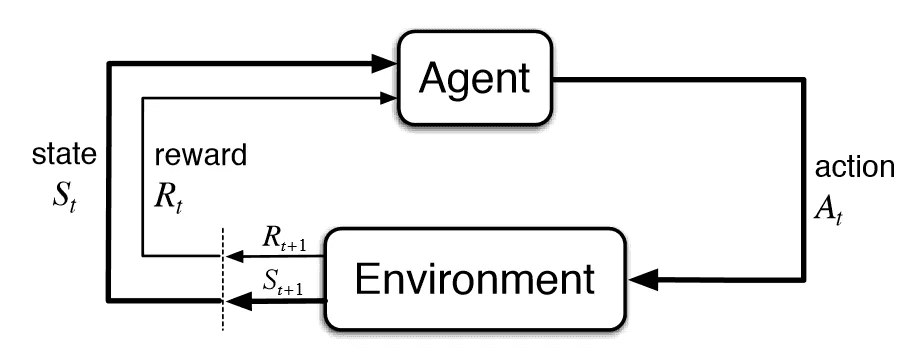

This chapter is all about dynamic programming, which is exactly what you'd think, although the treatment here is simultaneously more abstract than the typical CS undergrad's view of solving coding problems, and also very specific to solving tabular MDPs. There is not a lot of practical information in this chapter about solving real problems, but it is worth the discussion for the theoretical backbone.

Policy Evaluation

Let be an arbitrary policy. Recall that by definition, . That expands to the Bellman equation for .

This relationship between and itself allows us to update its values iteratively:

This process of assigning a value function to a policy is called policy evaluation (get it?).

Policy Improvement

Now let's flip the problem. Suppose we have a policy and a resulting value function . Note this does not mean is greedy with respect to . It may be possible that some action-value q values contradict the policy. For example, suppose is nearly uniform for actions at a state , and the action with the highest action-value as a relatively low value. Then it is possible that . If that is the case, then the policy defined by selecting at state and agreeing with elsewhere is strictly better than (in the sense that its expected return is greater).

Policy Iteration

Thus, once a value function has been defined from a policy, we can improve the policy by replacing it with the greedy policy with respect to that value function. Iterating this two-step process of evaluation followed by improvement is known as policy iteration:

This feels eerily similar to Expectation-maximization algorithms in statistics, about which I know almost nothing.

Other topics in this chapter

There are other topics, mostly related to the observation that the above algorithm involves repeated full scans of the state-action space at every step. Notably, the authors assert without proof that Bellman updates like the above can be done in piecemeal fashion and will still converge to optimal value-policy pairs.